What is Deep Learning? | How Deep Learning works

- Published November 2025

Delve into Deep Learning: uncover its core, compare it to AI/ML, explore applications, and understand its future.

Key Takeaways

- Deep Learning is a subset of Machine Learning within Artificial Intelligence, utilizing multi-layered neural networks to learn patterns from data.

- It stands out for its ability to automatically learn features from raw data, handle unstructured information, and achieve state-of-the-art results in complex tasks.

- Despite its power, Deep Learning faces challenges like high data and computational demands, interpretability issues, and ethical considerations.

What is deep learning?

Deep Learning is a specialized subset of Machine Learning (ML) within Artificial Intelligence (AI). It uses multi-layered artificial neural networks, inspired by the human brain, to learn patterns from vast amounts of data. The term “deep” refers to the numerous hidden layers within these neural networks, enabling the system to learn intricate and hierarchical representations. This technology powers intelligent voice assistants, self-driving cars, and generative AI, marking a significant leap in technological innovation.

Decoding the AI Hierarchy: Deep Learning vs. Machine Learning vs. Artificial Intelligence

Artificial Intelligence, Machine Learning, and Deep Learning are related but distinct concepts, forming a hierarchy where AI is the broadest category, followed by ML, with DL as a specialized subset.

- What is Artificial Intelligence (AI)? Artificial Intelligence refers to the overarching concept of machines performing tasks that typically require human intelligence. These tasks include problem-solving, learning, reasoning, and perception. AI aims to create systems that can mimic human cognitive functions.

- What is Machine Learning (ML)? Machine Learning is a subset of AI focused on enabling systems to learn from data without explicit programming. ML algorithms identify patterns, make predictions, and improve their performance over time. Unlike traditional programming, ML systems learn from data, adapting to new information without direct human intervention.

- What is Deep Learning (DL)? Deep Learning is a subset of Machine Learning that uses artificial neural networks with multiple layers (hence “deep”) to analyze data. DL excels at automatically learning complex features from raw, unstructured data. This capability greatly reduces the need for manual feature engineering, making it highly effective for tasks like image recognition, natural language processing, and speech recognition.

In summary, Deep Learning’s ability to automatically learn features and handle unstructured data makes it uniquely suited for solving complex, data-rich problems compared to traditional Machine Learning approaches. Understanding these distinctions is crucial for leveraging the right tool for specific AI applications.

The Inner Workings of Deep Learning: How Neural Networks Learn

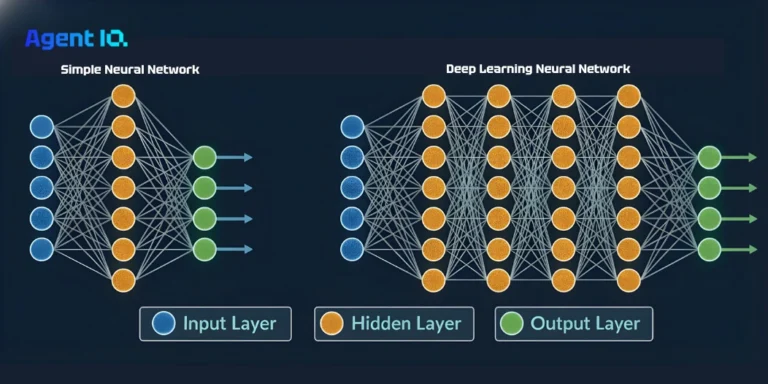

Deep Learning models are built upon Artificial Neural Networks (ANNs), inspired by the structure and function of the human brain. These networks form the foundation for how deep learning models learn and make predictions.

Artificial Neural Networks (ANNs): The Foundation

Artificial Neural Networks consist of interconnected nodes (neurons) organized in layers. These neurons process information and identify patterns by passing data through connections, which are adjusted during the learning process. The architecture of ANNs allows for complex data analysis and pattern recognition.
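To make “layers” and “connections” concrete, here is a minimal pure-Python sketch of one forward pass through a tiny network: two inputs, one hidden layer of three neurons, and a single output neuron. The layer sizes, the weight and bias values, and the helper names `dense_layer` and `forward` are hypothetical choices for illustration; real frameworks use optimized tensor operations rather than Python loops.

```python
def relu(z: float) -> float:
    """ReLU activation: keep positive values, replace negatives with 0."""
    return max(0.0, z)


def identity(z: float) -> float:
    """No activation: pass the value through unchanged."""
    return z


def dense_layer(inputs, weights, biases, activation):
    """Compute one fully connected layer.

    weights[j][i] is the strength of the connection from input i to neuron j,
    and biases[j] is neuron j's bias.
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias  # weighted sum
        outputs.append(activation(z))
    return outputs


def forward(x):
    """Pass input x through a hypothetical 2 -> 3 -> 1 network."""
    # Hidden layer: 3 neurons, each connected to both inputs.
    hidden = dense_layer(
        x,
        weights=[[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]],
        biases=[0.0, 0.1, -0.1],
        activation=relu,
    )
    # Output layer: 1 neuron connected to all 3 hidden neurons.
    output = dense_layer(hidden, weights=[[1.0, -1.0, 0.5]], biases=[0.2], activation=identity)
    return hidden, output


hidden, output = forward([1.0, 2.0])  # input layer: two raw feature values
print("hidden:", hidden)
print("output:", output)
```

With the input `[1.0, 2.0]`, the hidden layer produces approximately `[0.1, 1.0, 1.2]` and the output is approximately `-0.1` (up to floating-point rounding). Making the network “deeper” simply means calling `dense_layer` again on the previous layer’s output.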

Key Components of a Deep Neural Network

- Layers: Deep Neural Networks consist of three types of layers:

  - Input Layer: Receives the initial data.

  - Hidden Layers: Perform the majority of the processing through multiple layers, enabling hierarchical learning. The “depth” of the network refers to the number of hidden layers.

  - Output Layer: Produces the final result or prediction.

- Neurons (Nodes): These are the basic processing units within each layer, receiving inputs, performing calculations, and passing the results to the next layer.

- Weights & Biases: Weights determine the strength of the connections between neurons, while biases shift each neuron’s weighted input, effectively setting the threshold at which it activates. These parameters are adjusted during training to optimize the network’s performance.

- Activation Functions: These functions introduce non-linearity, allowing the network to learn complex patterns. Common examples include:

  - ReLU (Rectified Linear Unit): A widely used activation function that outputs the input directly if it is positive, and zero otherwise.

  - Sigmoid: Outputs values between 0 and 1, useful for binary classification.

  - Tanh (Hyperbolic Tangent): Outputs values between -1 and 1 that are centered around zero.
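A single neuron and the three activation functions listed above fit in a few lines of Python. This is an illustrative sketch assuming scalar inputs; the function names are hypothetical, and the sigmoid is written in a numerically stable form (an implementation choice, not part of the definition) so that large negative inputs do not overflow `math.exp`.

```python
import math


def relu(z: float) -> float:
    """ReLU: returns z if z is positive, otherwise 0."""
    return max(0.0, z)


def sigmoid(z: float) -> float:
    """Sigmoid: squashes z into the range 0..1 (stable for large |z|)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # avoids computing exp of a large positive number
    return e / (1.0 + e)


def tanh(z: float) -> float:
    """Hyperbolic tangent: squashes z into the range -1..1, centered on 0."""
    return math.tanh(z)


def neuron(inputs, weights, bias, activation):
    """One neuron: weighted sum of the inputs plus the bias, then the activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


# Compare how each activation transforms the same pre-activation values.
for name, fn in [("relu", relu), ("sigmoid", sigmoid), ("tanh", tanh)]:
    print(name, [round(fn(z), 3) for z in (-2.0, 0.0, 2.0)])

# The bias shifts the threshold: same weights and inputs (weighted sum = 0.0),
# different biases.
print(neuron([1.0, 2.0], [0.5, -0.25], bias=-0.5, activation=relu))  # 0.0
print(neuron([1.0, 2.0], [0.5, -0.25], bias=1.0, activation=relu))   # 1.0
```

Because the weighted sum is exactly zero in the last two calls, the bias alone decides whether the ReLU neuron stays silent or fires.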

The Learning Cycle: How Deep Learning Models Train

- Forward Propagation: Data is fed into the input layer, passes through the hidden layers, and produces a prediction at the output layer.

- Loss Function: The loss function measures the error between the predicted output and the actual target. Common loss functions include Mean Squared Error (MSE) and Cross-Entropy.

- Backpropagation: The error is propagated backward through the network, applying the chain rule layer by layer to calculate the gradients (derivatives) of the loss function with respect to every weight and bias.

- Optimization (Gradient Descent): The weights and biases are iteratively adjusted to minimize the loss function using optimization algorithms such as Gradient Descent. Variants include Batch Gradient Descent, Mini-batch Gradient Descent, and Stochastic Gradient Descent.
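The four steps of the learning cycle can be traced end to end in a deliberately tiny example: a single linear neuron, y = w·x + b, fitted with Mean Squared Error and full-batch gradient descent. This is a sketch under simplifying assumptions: one neuron instead of many layers, gradients derived by hand instead of by automatic differentiation, and made-up data on the line y = 2x + 1. In a deep network, backpropagation applies the same chain-rule reasoning through every hidden layer, and frameworks compute those gradients automatically.

```python
# Toy data (hypothetical): five points on the line y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]


def mse(preds, targets):
    """Mean Squared Error between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(targets)


def train(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w*x + b with full-batch gradient descent on MSE."""
    w, b = 0.0, 0.0  # arbitrary starting parameters
    n = len(xs)
    losses = []
    for _ in range(epochs):
        # 1. Forward propagation: predictions with the current parameters.
        preds = [w * x + b for x in xs]

        # 2. Loss function: record how wrong the predictions are.
        losses.append(mse(preds, ys))

        # 3. Backpropagation: with e = w*x + b - y and L = mean(e^2),
        #    the chain rule gives dL/dw = (2/n) * sum(e*x) and dL/db = (2/n) * sum(e).
        errors = [p - t for p, t in zip(preds, ys)]
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)

        # 4. Optimization: step each parameter against its gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, losses


w, b, losses = train(xs, ys)
print(f"w={w:.4f}, b={b:.4f}, first loss={losses[0]:.1f}, last loss={losses[-1]:.2e}")
```

The learned `w` and `b` should end up very close to 2 and 1. Switching to the mini-batch or stochastic variants would mean computing `grad_w` and `grad_b` on a random subset (or a single example) of the data at each step instead of on all of it.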

Defining Features: Key Characteristics of Deep Learning

Deep Learning’s capabilities are defined by several distinct features, which account for its power and broad applicability.

- Automatic Feature Learning: Deep learning models automatically extract and learn hierarchical features directly from raw data. This largely removes the need for manual feature engineering, saving time and resources.

- Superior Pattern Recognition and Associations: Deep Learning excels at identifying intricate patterns and associations, even in unstructured or unlabeled data.

- Versatility in Learning (Supervised & Unsupervised): Deep Learning can perform well with both labeled (supervised) and unlabeled (unsupervised) data, leveraging abundant unlabeled datasets to improve model accuracy.

- High Accuracy and Performance: Deep Learning achieves state-of-the-art results across various complex tasks, including image recognition, natural language processing, and speech recognition.

- Data and Computational Demands: Deep Learning requires large datasets and significant computational resources (GPUs/TPUs) for effective training. This is a key consideration for implementation.

- The “Black Box” Nature: The complex, multi-layered structure of deep learning models makes it difficult to understand the decision-making process, posing challenges for interpretability.

Architectures of Intelligence: Common Types of Deep Neural Networks

Different Deep Learning architectures are specialized for distinct data types and problem domains, enabling them to address a wide range of tasks efficiently.

- Convolutional Neural Networks (CNNs): CNNs are designed for processing visual data, such as images and videos. They use convolutional layers for feature extraction and pooling layers for dimensionality reduction. Common applications include image recognition, object detection, and facial recognition.

- Recurrent Neural Networks (RNNs) & Variants (LSTMs, GRUs): RNNs are used for processing sequential data, such as time series and natural language. They retain a “memory” of past inputs through recurrent connections. Long Short-Term Memory networks (LSTMs) and Gated Recurrent Units (GRUs) are variants that mitigate the vanishing gradient problem, which makes plain RNNs struggle to learn long-range dependencies. Applications include speech recognition, machine translation, and natural language generation.

- Transformer Networks: Transformer Networks have revolutionized sequence-to-sequence tasks, particularly in Natural Language Processing (NLP). They use a self-attention mechanism, allowing parallel processing of the input sequence. Applications include Large Language Models (LLMs) and advanced machine translation.

- Generative Adversarial Networks (GANs): GANs are designed to generate new, realistic data, such as images, audio, and video. They consist of a generator and a discriminator in adversarial competition. Applications include image generation, style transfer, and data augmentation.
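Two of the building blocks named above are easy to sketch directly: the sliding-window convolution and pooling at the heart of a CNN, and the scaled dot-product self-attention used in Transformers. These are minimal pure-Python illustrations, not how libraries implement them. The convolution assumes a single channel, stride 1, and no padding, and (like most deep learning libraries) actually computes cross-correlation, meaning the kernel is not flipped. The attention sketch skips the learned query, key, and value projections and the multiple heads of a real Transformer layer, passing the same toy vectors in as queries, keys, and values. The kernel and token values are hypothetical.

```python
import math


def conv2d(image, kernel):
    """Single-channel 2-D convolution, stride 1, no padding ("valid").

    Like most deep learning libraries, this computes cross-correlation:
    the kernel slides over the image without being flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + c] * kernel[a][c] for a in range(kh) for c in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; leftover rows/columns that don't fill a window are dropped."""
    rows, cols = len(feature_map), len(feature_map[0])
    return [
        [
            max(feature_map[i + a][j + c] for a in range(size) for c in range(size))
            for j in range(0, cols - size + 1, size)
        ]
        for i in range(0, rows - size + 1, size)
    ]


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention; every position looks at every other position."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # New representation: weighted average of all value vectors.
        outputs.append([sum(w * v[dim] for w, v in zip(weights, values)) for dim in range(len(values[0]))])
    return outputs


# CNN example: a hand-picked kernel that responds to dark-to-bright vertical edges.
# (In a real CNN the kernel values are learned during training.)
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]
edge_kernel = [[-1, 1], [-1, 1]]
feature_map = conv2d(image, edge_kernel)
pooled = max_pool(feature_map)
print(feature_map, pooled)

# Transformer example: three toy token vectors used directly as queries, keys, and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(self_attention(tokens, tokens, tokens))
```

On the toy image, the edge kernel fires only where dark pixels meet bright ones, so every row of the feature map is `[0, 2, 0, 0]`, and 2×2 max pooling shrinks it to `[[2, 0]]`. In the attention output, each token’s new vector is a weighted average of all the value vectors, with more weight on the keys most similar to its query; because every query is handled independently, the whole sequence can be processed in parallel.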

Deep Learning in Action: Real-World Applications

Deep Learning is transforming industries and daily life, with applications spanning a wide range of sectors.

- Computer Vision & Image Analysis: Deep Learning powers facial recognition, object detection (as used in self-driving cars), and medical imaging diagnostics, improving accuracy and efficiency.

- Natural Language Processing (NLP): Deep Learning enables machine translation, chatbots, sentiment analysis, Large Language Models (LLMs) like ChatGPT, and text summarization, enhancing communication and information processing.

- Speech Recognition & Audio Processing: Deep Learning drives voice assistants (Siri, Google Assistant), voice command systems, and transcription services, making technology more accessible and user-friendly.

- Healthcare & Medicine: Deep Learning aids in drug discovery, disease diagnosis, and personalized treatment, leading to more effective healthcare outcomes.

- Finance & Business Intelligence: Deep Learning is used for fraud detection, algorithmic trading, and customer service automation, improving efficiency and security in financial operations.

- Autonomous Vehicles & Robotics: Deep Learning enables perception, navigation, and robotic control, advancing the development of self-driving cars and automated systems.

- Generative AI: Deep Learning creates realistic images, text, music, and video, opening new possibilities for creative expression and content generation.

Navigating the Complexities: Challenges and Limitations of Deep Learning

Despite its power, Deep Learning faces significant challenges that must be addressed to ensure its responsible and effective deployment.

- Data Hunger: Deep Learning requires vast amounts of high-quality, often labeled, data to train effectively. This can be a barrier in domains where data is scarce or expensive to acquire.

- High Computational Cost: Training deep learning models demands significant hardware (GPUs/TPUs) and energy, leading to high costs and environmental concerns.

- Lack of Interpretability (“Black Box” Problem): The internal decision-making process of complex deep learning models is often opaque, making it difficult to understand and trust their outputs.

- Bias in Data: Deep learning models can learn and perpetuate biases present in training data, leading to unfair or discriminatory outcomes.

- Ethical Considerations: Deep Learning raises broader societal concerns, such as job displacement, potential for misuse, privacy, and accountability, which require careful consideration and regulation.

The Horizon of Intelligence: The Future of Deep Learning

Deep Learning is a rapidly evolving field, promising further transformative changes and advancements in the years to come.

- Continued Advancements in Architectures and Algorithms: The field will likely see more efficient, less data-hungry, and self-supervised learning approaches. Quantum computing may also play a role in accelerating deep learning processes.

- Greater Integration and Hybrid AI: Combining deep learning with other AI paradigms (e.g., symbolic AI, neuro-symbolic AI) could lead to more robust and transparent systems.

- Democratization and Accessibility: Easier access to deep learning tools, frameworks, and cloud resources will broaden adoption, allowing more individuals and organizations to leverage its potential.

- Ethical AI and Responsible Development: Addressing fairness, transparency, and accountability will be crucial, leading to the development of regulatory frameworks and ethical guidelines for AI development.

FAQs

What is deep learning in simple words?

Deep learning uses multi-layered artificial neural networks, inspired by the human brain, to learn patterns from vast amounts of data.

What is the difference between AI and deep learning?

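Deep Learning is a specialized subset of Machine Learning (ML), which is itself a subset of Artificial Intelligence (AI). AI is the broad goal of machines performing tasks that normally require human intelligence; deep learning is one specific approach to that goal, based on multi-layered neural networks.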

Is Deep Learning supervised, unsupervised, or both?

Both. Deep Learning is most commonly used for supervised learning, but it also supports unsupervised and self-supervised learning, and it can be combined with reinforcement learning (deep reinforcement learning).
